Word count: 3909. Estimated reading time: 19 minutes.

- Summary:

- The post describes a project of building a video AI inferencing PC out of old spare parts and hardware from Aliexpress, using Blue Iris software for IP security camera control and analysis. The system is built around an Intel Pentium G3258 CPU, 16 GB of DDR3 RAM, and an nVidia Tesla P4 card, which provides 192 GB/sec of memory bandwidth and can do about 5.7 TFLOPS in FP32. The PC is housed in a 2008-era case with a custom fan adapter to cool the AI accelerator card.

Friday 4 July 2025: 07:44.

The problem

Almost exactly three – yes, three – years ago I wrote here about my then recent purchase of the Dahua HFW5849T1-ASE-LED IP security camera. I was very impressed – for a few hundred euro, you can now get a 4k camera which can see in full colour in a level of darkness humans can’t see in. I raised two of those cameras plus a 180 degree camera on the site shortly after getting the solar panels and fibre internet installed, which is an amazing two years ago now. Those cameras have been watching over the site ever since, alerting my phone if anybody strays onto the property using their basic on-camera AI. Each camera has an SD card, and it continually records with about three and a half days of video history. The total power consumption for the site is 78 watts, much of which goes on those cameras (I know the 54 V DC power supply draws about 50 watts, but that also covers the PoE networking equipment and the fibre broadband).

This low power draw means I get continuous operation even with the Irish weather for ten months per year, with maybe 67-75% service during Dec-Jan depending on how long any run of short overcast days lasts. For the power budget, I cannot complain. However, if I did have more power budget, there are three things I would want to fix:

The AI on the cameras runs on (at best) an internal resolution of about 720 x 400 by my best guess. This works fine when objects get within maybe twenty-five metres of the camera, but anything further out won’t trigger as there just isn’t enough resolution.

The AI on the cameras isn’t bad for what it is, but it also likes to think all black animals are cars. Black cats, black birds, anything black triggers it. This is annoying at 4am.

The 3.5 days of storage time is a touch limiting. You really want several weeks as a minimum.

Reusing decade old hardware

As I am me, I have a whole bunch of old to ancient spare computer parts lying around, so around this time last year I put together the least old of those old parts to see if they had any chance at all of running Blue Iris, which is a long standing reasonably priced software solution to IP security camera control and analysis. As it is a Windows program, I needed to run a dedicated Windows box in any case, but I didn’t really want to spend much money on this until after the house was raised. The base hardware specs of this ancient kit:

- Intel Pentium G3258 which was an ultra low budget dual core Haswell part able to reach 3.2 GHz. Haswell is a 2013 era design, but it was the last truly improved new CPU architecture from Intel i.e. it doesn’t suck even today. One thing to note is that the G3258 as an ultra budget chip has AVX artificially disabled. This causes some AI software to barf at you, but a lot of software will work with just SSE surprisingly enough.

- 16 GB of dual channel DDR3-1333 RAM giving ~20 GB/sec of memory bandwidth.

- ASRock H97M-ITX motherboard (the original use for this PC when new was within a 12v powered mini-ITX case).

- Sandisk SSD Plus 240 GB SATA SSD from about year 2015. I remember picking it up super extra cheap in an Amazon sale and I didn’t have high hopes for its longevity, but it ended up proving me wrong.
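As a rough check on the memory bandwidth figure in the list above, the theoretical peak for DDR3 can be worked out from the transfer rate, the 64-bit channel width and the channel count. This is a minimal sketch of that arithmetic only; the function name is made up for illustration, and real-world throughput will sit somewhat below the theoretical peak.

```python
def ddr_peak_bandwidth_gb_s(mega_transfers_per_sec: float,
                            channels: int,
                            bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in gigabytes/sec (decimal units)."""
    bytes_per_transfer = bus_width_bits / 8  # a 64-bit DDR channel moves 8 bytes per transfer
    return mega_transfers_per_sec * 1e6 * bytes_per_transfer * channels / 1e9


# DDR3-1333 in dual channel, as fitted in this PC
cpu_bandwidth = ddr_peak_bandwidth_gb_s(1333, channels=2)
print(f"Peak DDR3-1333 dual channel bandwidth: {cpu_bandwidth:.1f} GB/sec")
```

That lands a little above 21 gigabytes per second, which is consistent with the rounded ~20 figure quoted for this RAM.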

I tested this last summer leaving it run for several months, and found that if I reduced the resolution from the cameras sufficiently, Blue Iris worked well enough that I felt confident in investing more money into this solution. As the daylight hours began to shrink, I removed this additional power load and then, to be honest, it kinda got backburnered for a while.

Two things then changed:

I saw on a HackerNews post that the Chinese had started dumping their ten year old AI hardware onto Aliexpress for cheap as they cleared out parts they were retiring. Unlike previous AI acceleration hardware, the ten year old stuff (nVidia Pascal) isn’t too far from the modern hardware i.e. modern CUDA programs run just fine on Pascal, just slower. A cheap enterprise AI accelerator card would be game changing for realtime video analysis.

Thanks to recent economic uncertainty about the future, the price of high capacity enterprise hard drives cratered to firesale prices. I ended up buying the only brand new part in this project – a Seagate Exos 28 TB enterprise hard drive – delivered for €400 inc VAT and delivery. Just a few months ago that drive cost nearly a grand. Madness.

I picked up an 8 GB nVidia Tesla P4 card from Aliexpress for €125 inc VAT and delivery. It turned out to be brand new, never used, and it even came with its additional low profile bracket. This is a 2016 era card and it has 192 GB/sec of memory bandwidth and can do about 5.7 TFLOPS in FP32 for under a 75 watt power budget. For the money, and for the power budget, this is a whole lot of AI acceleration – even brand new 2024 hardware would get about the same memory bandwidth and maybe twice the compute if constrained to a 75 watt power budget. And it would cost several times what this card did!

The nVidia Tesla P4 card – and indeed its whole generation of AI hardware which lacks tensor cores – isn’t well suited for running large language model AI (i.e. chatbots). But I ran a variety of 8B LLMs on it out of curiosity and it does about 18 tokens/sec until thermal throttling kicks in, whereupon you get about 12 tokens/sec. You could of course fit more cooling (you’ll see my ‘fan solution’ below), but ultimately this generation of GPU on its generation of CPU is going to be far slower at LLMs than even a recent CPU on its own. I’ll put this another way – my M3 Macbook Pro laptop handily beats the Tesla P4 for LLM performance and my Threadripper Pro workstation blows far past it. The latter has less memory bandwidth than my Macbook, but LLMs, especially newer ones, need more compute, and that is exactly what the Tesla P4 (relatively speaking) lacks.

All that said, while ten year old GPUs aren’t great for running LLMs, they absolutely rock image analysis which is the kind of task that generation of hardware was designed for. Identifying objects, tracking facially recognised people in crowds, 3D spatial reconstruction from multiple images – this generation of AI hardware absolutely aces those tasks as you will see later.

Finally, there was a little compatibility issue with the Seagate Exos drive and this ASRock motherboard – the motherboard doesn’t wait long enough for the hard drive to respond, and so on boot it sometimes doesn’t find the drive. I trial and errored BIOS options to slow down the boot enough that it now finds the drive 95% of the time, and if it doesn’t find it then a soft reboot fixes the issue as it avoids the drive doing a cold start. Just something to be borne in mind when pairing really new hard drives with ten year old motherboards.

The build

This is the oldest PC case I still have. It is from around 2008 I believe. It was once the TV PC for myself and Megan back a very long time ago – myself and Johanna had a TV PC in our St. Andrews house, and I think when we left I gave it away as I wanted to replace it with modern hardware, and this case was what enclosed that modern hardware (I distinctly remember with grimace the first motherboard in that new TV PC, it was a Gigabyte branded model with AMD CPU and it cost me enormous amounts of time due to quirks and I ended up junking it for an ASRock motherboard and Intel CPU out of frustration). The case, and especially its power supply which got extracted and used for other things, carried on.

In Canada, we bought Android TV pucks just before we came back to Ireland having not used a TV PC in Canada, so this case has certainly not been in use since 2011 or so. I have been keen to put it back into use as it is one of my favourite cases – partially because it is especially well designed, and partially because it was ludicrously cheap and even came with a power supply for the money. But mostly I really like how tacky and garish it is. It was the perfect looking TV PC with its piano shiny black plastic with red and gold plastic. In case you’re wondering why there is tape on the front, it’s because the hard drive light is so ultra bright it’s very annoying. So we taped it over with two layers to dull down the brightness. You might notice that with this coming back into use now I took the opportunity to fit a USB3 5.25” adapter, as the motherboard is new enough to supply USB3 and I might want to copy video or something large one day. The 5.25” insert cost something like €13, and it seemed a wise upgrade for this case as absolutely nobody uses a CD-ROM drive anymore. As you may notice it can also house a 3.5” hard drive, which is exactly where I mounted the Exos hard drive (there are two dedicated 3.5” bays above, but they are horizontally mounted and I wanted this drive vertically mounted as it gets rather warm – see below).

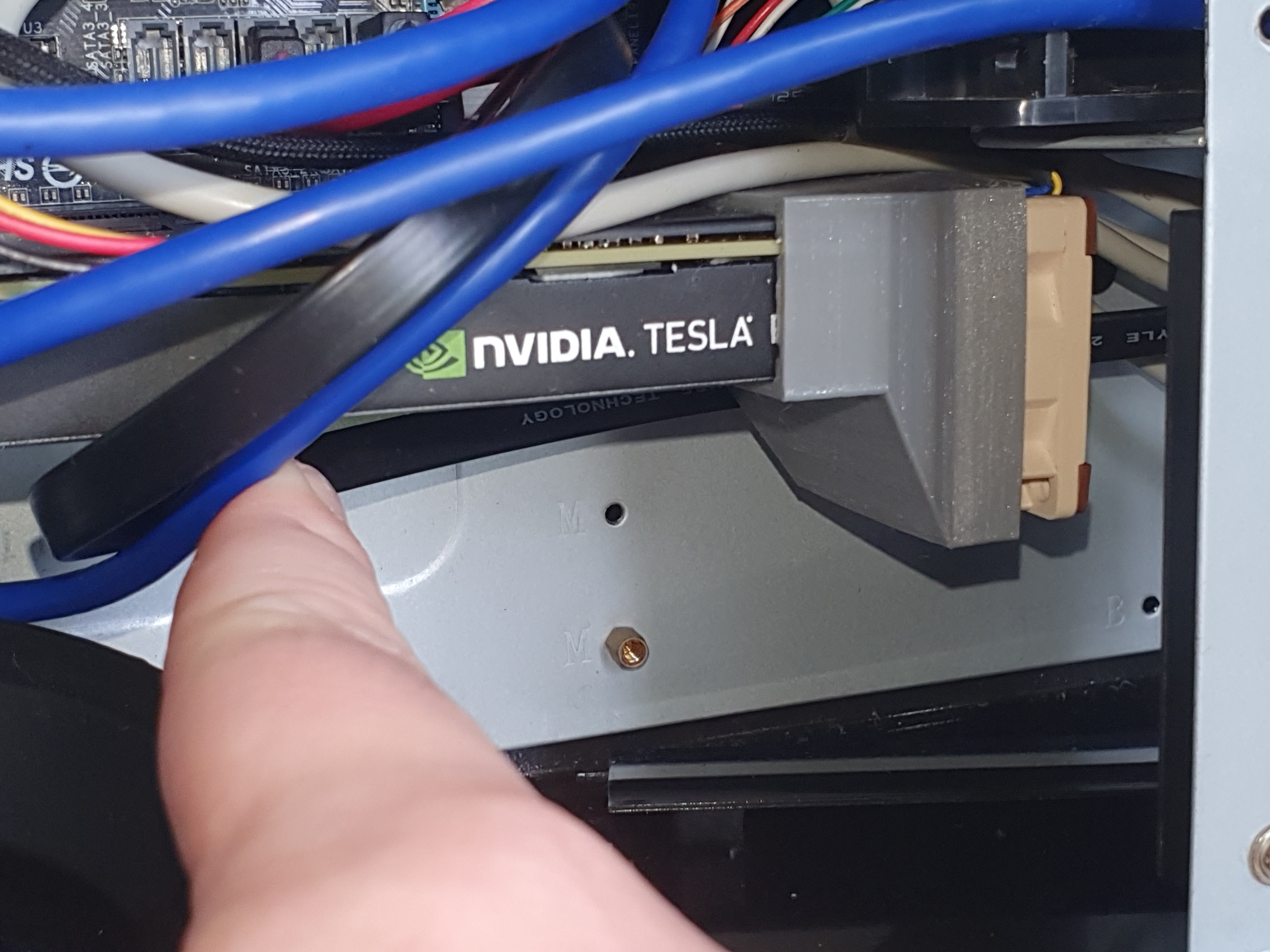

The mini-ITX motherboard has only one PCIe slot and the board is far too small for the case. But no matter – this case has enough space to fit my 3D printed fan adapter, which is my custom solution to how to cool the AI accelerator card, because enterprise cards don’t cool themselves like consumer cards, they expect cooling to be supplied to them:

I got the design for the adapter off the internet, but I had to do a fair bit of customisation to get it to fit into the smaller original case I had in mind for this project. But eventually it was just easier to break out this much too large case, and then not sweat trying to get everything to fit inside a small space. Had I bitten the bullet sooner, I could have saved myself several days of my free time … but no matter, it’s all built and working now.

At the end is a Noctua PWM fan so it doesn’t blast at full speed all the time. I chose a speed where it isn’t loud enough to be annoying, which isn’t fast enough to run LLMs without thermal throttling, but it is plenty fast enough to analyse video as we shall see.

Finally, I put in a watt meter to aid tracking power consumption of this specific PC:

That says 84.8 watts incidentally. I think the CPU + motherboard probably consumes about 30 watts and the AI accelerator about 48 watts when it’s in use. With the fan speed I chose, the hottest part of the AI accelerator sits at about 60 C, which seems fine to me.

From the perspective of the inverter, the whole site now uses 158 watts, which if it used 78 watts before, means that this AI PC takes 80 watts on average over time. That, as with last year, will limit how long I can leave this box running with the current number of solar panels – once the days begin to shorten, I’ll have to remove it once again.

(In case you’re wondering how the maths works there, the reason more load uses proportionately less power is because conversion efficiency is lousy at low loads, so as the load rises you get less of a load increase than you would expect.)
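To see why the extra 80 watts matters for a solar powered site, it helps to turn the average draw into energy per day. The sketch below uses only the 78 watt and 158 watt figures from above and assumes the draw is constant around the clock; the function name is just for illustration.

```python
HOURS_PER_DAY = 24


def daily_kwh(average_watts: float) -> float:
    """Energy used per day, in kWh, for a constant average draw in watts."""
    return average_watts * HOURS_PER_DAY / 1000


site_before_kwh = daily_kwh(78)    # whole site without the AI PC
site_after_kwh = daily_kwh(158)    # whole site with the AI PC running
added_kwh = site_after_kwh - site_before_kwh

print(f"Before: {site_before_kwh:.2f} kWh/day")
print(f"After:  {site_after_kwh:.2f} kWh/day")
print(f"Added:  {added_kwh:.2f} kWh/day")
```

In other words the AI PC roughly doubles what the panels and batteries must supply each day, which is why it has to come out again once the days shorten.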

Blue Iris

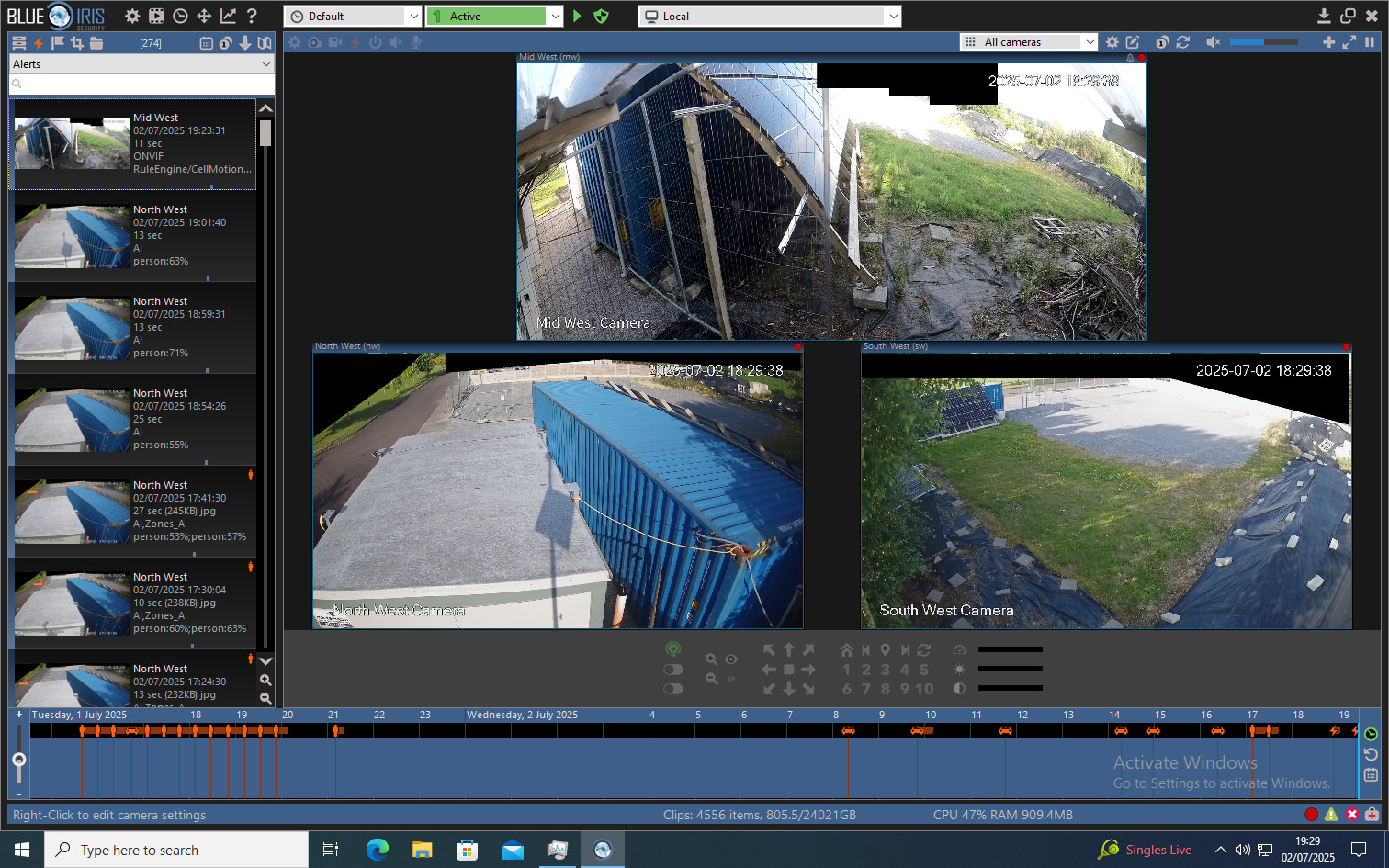

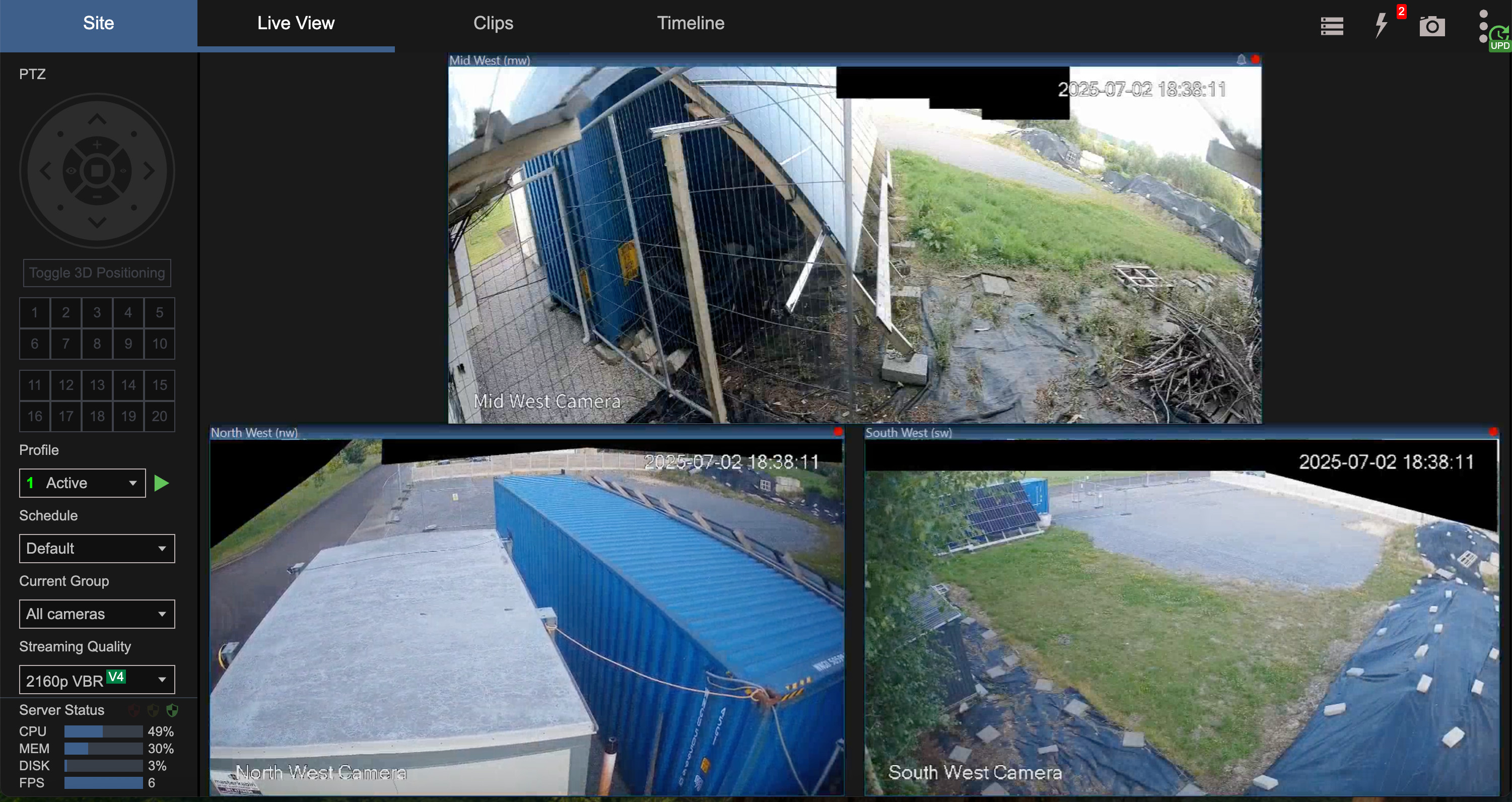

Blue Iris has a Windows based UI, but also a convenient web based UI so you don’t need to remote desktop in:

Blue Iris is extremely configurable, and it has taken me several days of twiddling before I became happy with the results. Unfortunately, Haswell era CPUs can only decode in hardware h.264 video up to 1080p. After that, it’s all software decoding. Hence three 4k resolution h.265 feeds @ 7 fps cause the CPU to peg to 100% and analysis begins to fall behind. The GPU barely breaks a sweat with 4k images, analysing each in about 120 milliseconds, and runs just above its lowest power state above idle at about 48 watts (absolutely idle is about 7 watts, incidentally, but as soon as any software connects to the card, power consumption jumps to 35 watts or so).

The cameras can emit a lower resolution substream for ‘free’, and Blue Iris can be told to use that substream for the AI inferencing whilst otherwise using the 4k stream for everything else e.g. storage to disc, browsing etc. Given that the Haswell CPU can’t hardware decode better than 1080p h.264, I chose exactly that for the substreams and three streams of that use about 26% of CPU. Unusually (see below), I send them immediately to the GPU and do as little CPU processing as possible. The nVidia Tesla P4 takes about 45 milliseconds to process a 1080p image, and Blue Iris asks it to do so every second per camera. Therefore if this PC were newer hardware, this card could happily analyse twenty 1080p cameras which is good to hear, as we shall be eventually fitting eight external cameras and four internal cameras. If it could decode h.265 in hardware, maybe even eight 4k streams could be doable.
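The camera counts above come from dividing one second of GPU time by the time each image takes to analyse. Here is a minimal sketch of that estimate, assuming images are analysed strictly one after another with no batching and no overhead between them, which is a simplification rather than a description of how Blue Iris schedules its AI calls.

```python
def max_cameras(inference_ms: float, analyses_per_sec_per_camera: float = 1.0) -> int:
    """How many cameras fit into one second of GPU time, analysed serially."""
    gpu_ms_per_camera = inference_ms * analyses_per_sec_per_camera
    return int(1000.0 // gpu_ms_per_camera)


def gpu_busy_fraction(cameras: int, inference_ms: float,
                      analyses_per_sec_per_camera: float = 1.0) -> float:
    """Fraction of each second the GPU spends on inference."""
    return cameras * inference_ms * analyses_per_sec_per_camera / 1000.0


limit_1080p = max_cameras(45)    # ~45 ms per 1080p image
limit_4k = max_cameras(120)      # ~120 ms per 4k image
current_load = gpu_busy_fraction(3, 45)

print(f"1080p cameras at 1 analysis/sec: {limit_1080p}")
print(f"4k cameras at 1 analysis/sec:    {limit_4k}")
print(f"GPU busy with three 1080p cameras: {current_load:.1%}")
```

With the measured timings this gives 22 cameras at 1080p and 8 at 4k, in line with the round numbers above, and suggests the three current cameras keep the card busy for well under a fifth of each second.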

In Blue Iris terms, the current three camera setup runs at 34.5 MP/s which simply adds the bandwidths of all the cameras together. That would imply a maximum of ~150 MP/s if maxed out. I read online in forums that this old hardware should max out at around 800 MP/s albeit with AI disabled, however this CPU doesn’t have AVX and it has especially small CPU caches. Also, surely sending everything to AI immediately is expensive as it implies many more memory copies – plus I note that the AI backend is written in Python, and it eats 6% of CPU just by intermediating between the CUDA on the GPU and the main Blue Iris program.

You can, of course, tell Blue Iris to only invoke the AI if something in the picture changes. However, two of the cameras are on poles and the wind makes them wobble, so the motion detection is always being activated which means the AI is always getting called anyway. Turning off the motion detection prefilter therefore reduces overall CPU.

Storage

Blue Iris appears to write to the disc at about 0.9 MB/sec per 4k h.265 stream. For a 28 TB drive, of which 24 TB is probably available for writing, that is 75 GB/day/camera which means one camera will fill that drive in 325 days, two cameras in 162 days etc. It should take 3.5 months for the current three cameras to fill that drive, but about four weeks for twelve cameras. This is exactly why I splurged now for precisely a 28 TB hard drive – four weeks of recordings I reckon is exactly what I’m looking for, and the first commercial release of HAMR technology hard drives is probably going to have been overengineered for durability. I guess I’ll find out.

HAMR drives generate a lot of heat when writing due to the laser assisted writing – when I was soak testing it, the write only stage generally took the drive up to a toasty 65 - 70 C whereas the read only stage kept the drive at 28 - 32 C or so. I didn’t have forced air on it, but the drive was open to the air. At 0.9 MB/sec write speeds and in a case with at least some forced airflow, and the drive mounted vertically to aid heat being driven off of it, the drive sits at 43 C or so – I guess that laser remains turned on for long enough even at low rates of writing to generate a fair bit of heat. Heat, of course, equals additional power consumption and it is unfortunate that for my future house we shall be adding so much additional waste heat. Non-HAMR drives might only be +5 C for sustained writing, but non-HAMR capacities topped out at 18 TB for Seagate so I guess the increased write power consumption is now here to stay.
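The retention arithmetic above is easy to rerun for other camera counts. This sketch assumes decimal units (1 TB = 1000 GB = 1,000,000 MB), a constant write rate per camera, and the same 24 TB of usable space; the function names are only for illustration. Because the write rate is itself an approximate observation, the results come out slightly below the rounded figures in the text.

```python
SECONDS_PER_DAY = 86_400


def gb_per_day(mb_per_sec: float) -> float:
    """Daily volume written by one camera, in GB (decimal units)."""
    return mb_per_sec * SECONDS_PER_DAY / 1000


def days_to_fill(usable_tb: float, cameras: int, mb_per_sec_per_camera: float = 0.9) -> float:
    """Days until the drive is full if every camera writes continuously."""
    total_gb_per_day = gb_per_day(mb_per_sec_per_camera) * cameras
    return usable_tb * 1000 / total_gb_per_day


for cameras in (1, 2, 3, 12):
    days = days_to_fill(24, cameras)
    print(f"{cameras:>2} camera(s): {days:6.1f} days ({days / 7:5.1f} weeks)")
```

At exactly 0.9 MB/sec this gives a little under 78 GB per day per camera, about 103 days for three cameras and just under four weeks for twelve.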

How good is the AI?

The AI is CodeProject.AI which lets you configure which backend you prefer. After much testing, I settled on YOLOv8 though with a custom IP camera detection model loaded, as the default one generates far too many false positives e.g. finding that concrete blocks are trucks etc.

So far, with the custom model, I am impressed! It detects people at the very furthest extent the camera can see, where in 1080p they are only maybe a dozen pixels high. It detects vans and cars and people which are obscured behind the cabin or shipping container. As a result, it triggers far more frequently than the on-camera AI does. But in the last few days of testing it hasn’t had any false positives except during sunrise (false negatives are, by definition, considerably harder for me to notice).

The sunrise false positives are for the middle camera only, and I think they’re due to how the light makes the complicated view look to the camera. I’m sure it’s fixable with a bit of tweaking the settings.

How do you get notified?

The camera built-in AI has an integration via its manufacturer’s Android app and a server somewhere in China. Blue Iris does also have an Android app and a cloud push server for an annual subscription, but internet feedback reckons it isn’t much good. Instead, they think wiring it into Pushover is the best course of action, and there is a ‘quick start’ tutorial at https://ipcamtalk.com/threads/send-pushover-notifications-with-pictures-and-hyperlinks.58819/ which is nearly cut-and-paste easy.
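For a feel of what such an integration involves, here is a minimal Python sketch of posting a text alert to the Pushover message API. It is not the method from the tutorial above, and it is not how Blue Iris itself is configured; the token and user key are placeholders, and the camera name, label and message wording are made up for illustration. Sending a picture needs a multipart upload through the `attachment` field, which this sketch leaves out.

```python
import urllib.parse
import urllib.request
from typing import Dict, Optional

PUSHOVER_URL = "https://api.pushover.net/1/messages.json"


def build_alert_fields(app_token: str, user_key: str, camera: str, label: str,
                       clip_url: Optional[str] = None) -> Dict[str, str]:
    """Assemble the form fields for one alert (no network access here)."""
    fields = {
        "token": app_token,   # application API token from the Pushover dashboard
        "user": user_key,     # the user or group key to notify
        "title": f"{camera}: {label} detected",
        "message": f"Blue Iris AI flagged a {label} on camera {camera}.",
    }
    if clip_url:
        fields["url"] = clip_url          # optional link shown with the alert
        fields["url_title"] = "Open clip"
    return fields


def send_alert(fields: Dict[str, str], timeout: float = 10.0) -> int:
    """POST the alert to Pushover and return the HTTP status code."""
    data = urllib.parse.urlencode(fields).encode("utf-8")
    request = urllib.request.Request(PUSHOVER_URL, data=data, method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Placeholder credentials: replace with real ones before calling send_alert().
example = build_alert_fields("APP_TOKEN_PLACEHOLDER", "USER_KEY_PLACEHOLDER",
                             camera="Gate", label="person")
print(example["title"])
```

Keeping the field building separate from the network call makes it easy to check what would be sent before any real notification goes out.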

In next to no time, the Pushover Android app was pinging me with a high resolution photo anytime absolutely anything at all involving a human or a vehicle occurs. I can’t complain about the accuracy nor push notification latency – indeed, I’m surprised how well it does given the downsampled 1080p input.

What’s next for this project?

Down the line, I will want to add facial recognition so the thing doesn’t go mad with alerts every time I or my family are on the site. Also, I’d like to add 3D spatial reconstruction – this is where some CUDA code takes several camera images of a scene from known vantage points, and from that reconstructs the 3D spatial location of objects. For this to work, you ideally need three cameras and no less than two cameras pointing at the same place from very different locations which I don’t currently have, so there isn’t much point in setting that up right now. Down the line however, I have deliberately designed the house camera layout so images overlap and the AI can reconstruct locations.
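The core geometric step in that kind of reconstruction can be sketched quite simply: each camera that sees an object gives you a ray from its known position towards the object, and the object sits where two such rays (nearly) cross. The sketch below is plain Python rather than CUDA and only covers two cameras; it assumes the ray directions have already been derived from calibrated camera images, which is the hard part and is not shown. The camera positions and target are made-up numbers.

```python
from typing import List, Sequence


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate(p1: Sequence[float], d1: Sequence[float],
                p2: Sequence[float], d2: Sequence[float]) -> List[float]:
    """Midpoint of the shortest segment between the lines p1 + t*d1 and p2 + s*d2."""
    w = [a - b for a, b in zip(p1, p2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, so no unique crossing point exists")
    t = (b * e - c * d) / denom   # distance parameter along the first ray
    s = (a * e - b * d) / denom   # distance parameter along the second ray
    q1 = [p + t * k for p, k in zip(p1, d1)]
    q2 = [p + s * k for p, k in zip(p2, d2)]
    return [(x + y) / 2 for x, y in zip(q1, q2)]


# Hypothetical layout: two cameras 10 m apart on 3 m poles, both seeing one object.
camera_a = (0.0, 0.0, 3.0)
camera_b = (10.0, 0.0, 3.0)
ray_a = (5.0, 20.0, -2.0)     # direction from camera A towards the object
ray_b = (-5.0, 20.0, -2.0)    # direction from camera B towards the object

location = triangulate(camera_a, ray_a, camera_b, ray_b)
print([round(v, 3) for v in location])
```

With noisy real detections the two rays will not quite meet, which is why the midpoint of their closest approach is used, and why a third camera helps.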

The reason we want the AI to know where people are is principally so lighting can be dynamically adjusted upwards and downwards based on whether humans are near a thing. So, ideally speaking, the outdoor lighting would all normally be very dim saving power and not disturbing nature. If a human – but not an animal – is close to a particular wall, the AI would brighten just that portion of the outdoor lighting. This wouldn’t just save on power, I think it would have an excellent burglar deterrence effect too because clearly something is aware of your presence.
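As a sketch of the lighting rule described above, the function below brightens only those lighting zones that have a detected person nearby and leaves everything else dim. Everything here is hypothetical: the zone names, the coordinates, the 5 metre radius and the two brightness levels are placeholders, and it assumes detections already carry a label and a ground position.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float]

DIM = 0.1      # hypothetical resting brightness (fraction of full output)
BRIGHT = 1.0   # hypothetical brightness when a person is close


def zone_brightness(zones: Dict[str, Point],
                    detections: Iterable[Tuple[str, Point]],
                    radius_m: float = 5.0) -> Dict[str, float]:
    """Return a brightness level per lighting zone based on nearby people."""
    detections = list(detections)
    levels = {}
    for name, zone_xy in zones.items():
        near_human = any(
            label == "person" and math.dist(zone_xy, xy) <= radius_m
            for label, xy in detections
        )
        levels[name] = BRIGHT if near_human else DIM
    return levels


zones = {"north_wall": (0.0, 10.0), "south_wall": (0.0, -10.0)}
detections = [("person", (1.0, 8.0)), ("cat", (0.0, -9.0))]

levels = zone_brightness(zones, detections)
print(levels)
```

Here the person near the north wall brightens that zone, while the cat by the south wall is ignored and that zone stays dim.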

You may remember that I originally planned to use Time of Flight sensors or Infrared sensors for this. But they’re a whole load more wiring work, and need a whole load more ESP32s to be placed around all of which then need more power, more ethernet wiring etc. As security cameras were going to be installed in any case, if they could be used to avoid all those sensors, that would be a big win. The concern was what the power consumption hit would be, and now that question has been answered.

Anyway, all that is well down the line, and I now have proven that a low power AI accelerator will do the job very nicely. So that’s another todo item ticked off for now, and another project which can be put into hibernation until the house gets built.

What’s next for this summer?

In terms of what’s next for my remaining summer, as you have probably noticed I have been making great progress at advancing my many projects and writing up my notes on them here. I have three projects awaiting testing and writeup here remaining:

- Differential pressure sensor testing.

- Thermally broken kitchen extractor testing.

- Radar human presence sensor testing.

The first I’ve mentioned here several times before. The second is to see if a Passive House grade of insulated kitchen extraction vent can be created – I’ll be empirically testing this using Megan’s hair dryer and a thermal camera. The third is a new type of sensor recently available on Aliexpress – unlike Time of Flight sensors which work on the basis of analysing the scattering of infrared laser light, these sensors work on the basis of analysing the scattering of radio waves. Supposedly, they can tell you (a) if a human is present and (b) if that human is standing, sitting or lying down, and (c) they have a range of about five metres. If they really can tell human position accurately, they would solve my ‘bedroom problem’ because I can’t fit cameras inside bedrooms, so a sensor which can tell if somebody is in a bedroom, and whether they are sleeping or not, would be very handy.

We ended up purchasing a Fiido D11 2025 edition for Megan’s commute bike, so I’d expect a short post here on that at some point.

I’ve been and returned from my final ISO WG21 C++ standards meeting in Bulgaria, and I have cranked out four papers for the next ISO WG14 C standards meeting. So that’s all done.

The trips to Amsterdam and London have been planned and booked. This Monday I’ll even get another unpleasant todo I’d been putting off cleared: a colonoscopy.

There are two things I have not made good progress upon so far:

- Losing weight (I remain at 84 kg despite substantially reducing my caloric intake and increasing frequency and duration of exercise).

- Completing the 3D services layout plan, which I ‘mysteriously’ keep finding excuses to not do because I dislike doing it so much.

I’m sure that as the low hanging more enjoyable fruit gets harvested, I’ll have ever fewer excuses to not get moving on the less enjoyable work. Here’s hoping!
